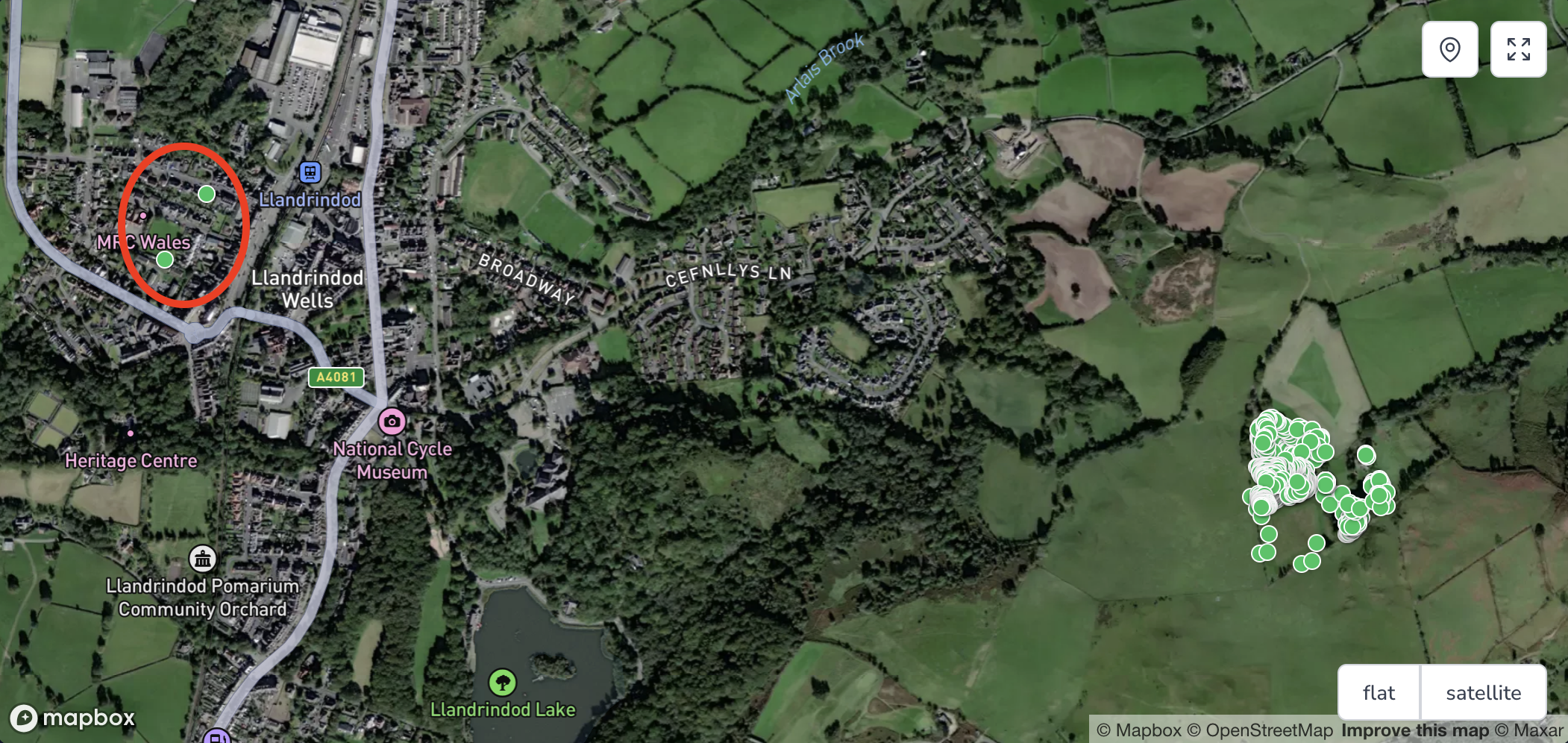

When building an API for the reforestation charity Protect Earth, I needed a way to show all the trees we were planting on a map. Thanks to an iOS application we used to photograph trees, we could record the species and use the GPS to record the location, so we knew exactly where all those trees were. As the climate & nature crises ramped up, so did the size of our projects, with some sites now having 10,000 or even 20,000 trees planted!

This growth is fantastic, but not if it makes our software fall over, and it did: the bigger sites broke our API because I had never worked out how to paginate GeoJSON, and I was too busy out in the field to mess around with it. Thankfully, streaming has solved the problem perfectly, and it’s been a lot of fun to implement.

Use Case

It’s important to know where our trees have been planted so we can protect them, and also so we can make sure the GPS hasn’t gone wonky and sent some off into the sea. I don’t want people having a certificate saying their tree is in one place if it’s actually miles from there. So if there are 20,000 pins to put on a map, we gotta put 20,000 pins on a map.

A /maps/parcel/abc endpoint outputs the GeoJSON, and for smaller sites this is fine. The 20,000-tree sites struggle, first with zero pins showing up for almost 30 seconds, then with the browser panicking as it receives a huge payload and tries to render all of it at once.

I originally wondered about pagination. GeoJSON does not offer any standard pagination solution that I’m aware of, so I’d have had to wedge it in with Link headers. That could have worked, but it felt weird to even try. Pagination is generally more of a user interface feature, letting a user or client optionally fetch only as much of a collection as they need. When the client needs the whole collection anyway, it’s a laborious, flailing approach that feels more like long-polling than fetching a collection.

The problem here is that the sheer size of the collection is stressing the memory of both the server and the client, because each has to deal with all of it at once. Dealing with a stream of items would solve that memory issue, and we can do that with JSON Streaming.

JSON Streaming

Streaming is how a lot of the rest of the web already works. A large progressive image, for example, is streamed so that a low-resolution version shows up quickly (the start of the stream), and then, as more data is loaded, the higher-quality representation fills in a few chunks at a time.

For streaming generic JSON it’s the same idea: put chunks of JSON into the response in a way that lets the client split them back up. To avoid confusion over all the { } and [ ] brackets that would be split over various lines, there are standards and conventions. One standard is RFC 7464: JSON Text Sequence, which not only pops each JSON object on its own line, but also requires special ASCII control characters to delimit them, so you can pretty-print them or keep them inline.

It should look like this:

```text
{"timestamp": "1985-04-12T23:20:50.52Z", "level": 1, "message": "Hi!"}
{"timestamp": "1985-04-12T23:20:51.37Z", "level": 1, "message": "Hows it hangin?"}
{"timestamp": "1985-04-12T23:20:53.29Z", "level": 1, "message": "Bye!"}
```

With the ASCII control characters visualized it would actually look a bit like this:

```text
0x1E{"timestamp": "1985-04-12T23:20:50.52Z", "level": 1, "message": "Hi!"}0x0A
0x1E{"timestamp": "1985-04-12T23:20:51.37Z", "level": 1, "message": "Hows it hangin?"}0x0A
0x1E{"timestamp": "1985-04-12T23:20:53.29Z", "level": 1, "message": "Bye!"}0x0A
```

Don’t worry, this will all start to make sense with some coming code samples.
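As a tiny first sample, here’s a minimal JavaScript sketch of the encoding side, assuming nothing beyond the RFC 7464 framing described above: every value gets an RS in front and an LF behind. The encodeJsonSeq name is made up for illustration.

```javascript
// RS (0x1E) goes before each JSON text and LF (0x0A) goes after it, per RFC 7464.
const RS = '\x1e';
const LF = '\n'; // in JavaScript, '\n' is the U+000A Line Feed character

// Encode an array of values as a JSON Text Sequence string.
function encodeJsonSeq(values) {
  return values.map((value) => RS + JSON.stringify(value) + LF).join('');
}

const seq = encodeJsonSeq([
  { level: 1, message: 'Hi!' },
  { level: 1, message: 'Bye!' },
]);

// Swap the invisible control characters for their hex codes so they show up when printed.
const visible = seq.replace(/\x1e/g, '0x1E').replace(/\n/g, '0x0A\n');
console.log(visible);
// prints:
// 0x1E{"level":1,"message":"Hi!"}0x0A
// 0x1E{"level":1,"message":"Bye!"}0x0A
```

Because the RS marks the start of every record, a reader never has to count brackets to find where one record ends and the next begins.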

GeoJSON Streaming

This is a post about GeoJSON, so let’s talk about that. Guess what, there’s another RFC that specifically talks about how to stream GeoJSON using JSON Text Sequence, and I bet you can guess the name: RFC 8142: GeoJSON Text Sequence.

It works the same way, but instead of returning a FeatureCollection, the response is a stream of Feature objects, which is essentially a FeatureCollection, right?

```text
$ curl -XGET http://localhost/parcel/1 -i
HTTP/1.1 200 OK
Content-Type: application/geo+json-seq
Cache-Control: no-cache, private
Date: Tue, 09 Sep 2025 16:52:29 GMT

{"type":"Feature","geometry":{"type":"Point","coordinates":[-3.331792,50.997009]},"properties":{"type":"tree"}}
{"type":"Feature","geometry":{"type":"Point","coordinates":[-3.330440,50.996594]},"properties":{"type":"tree"}}
{"type":"Feature","geometry":{"type":"Point","coordinates":[-3.330896,50.996337]},"properties":{"type":"tree"}}
{"type":"Feature","geometry":{"type":"Point","coordinates":[-3.331937,50.996821]},"properties":{"type":"tree"}}
```

Creating a GeoJSON Stream

Alright! So how do we actually build this? DuckDuckGo is a little light on examples of this, so I had to forge my own path. Protect Earth runs on Laravel PHP so we’re going to use that as an example for producing GeoJSON streams, but the idea is the same in any language.

Here’s a working example of a route (which for Laravel fans is actually inside a Nova component but shouldn’t make too much difference).

```php
<?php

use App\Models\Parcel;
use Illuminate\Support\Facades\Route;
use Symfony\Component\HttpFoundation\StreamedResponse;

Route::get('/parcel/{parcel}', function (Parcel $parcel): StreamedResponse {
    // Instead of grabbing all units into memory, iterate through one at a time with a cursor
    $cursor = $parcel->units()->cursor();

    // Wrap this in a callback so it can run inside stream()
    $callback = function () use ($cursor) {
        $count = 0;

        foreach ($cursor as $unit) {
            // RS (0x1E) + one compact GeoJSON Feature + LF (0x0A)
            yield chr(0x1E).json_encode([
                'type' => 'Feature',
                'geometry' => $unit->coordinates->toArray(),
                'properties' => [
                    'id' => $unit->id,
                    'type' => $unit->unit_type->value,
                    'species' => $unit->specie?->name,
                ],
            ]).chr(0x0A);

            // Push buffered output through to the client every 100 records
            if (0 === ++$count % 100) {
                flush();
            }
        }
    };

    return response()->stream($callback, status: 200, headers: [
        'Content-Type' => 'application/geo+json-seq',
    ]);
});
```

A few things here might feel a little weird, but they make sense when we go through one bit at a time.

```php
yield chr(0x1E).json_encode(
```

The yield passes each string to response()->stream(), which uses the generator to stream the response. This is all handled by Symfony\Component\HttpFoundation\StreamedResponse directly, instead of any of Laravel’s streamed JSON helpers, which have a few too many conventions of their own to give us the freedom to work with JSON Text Sequence properly.

The chr() PHP function returns the one-byte character for a given code, so chr(0x1E) gives us the ASCII Record Separator (RS) character, 0x1E being its hexadecimal code (callback to RFC 7464: JSON Text Sequence).

chr(0x0A) is another handy ASCII control character, the “Line Feed” (LF), which gets popped onto the end. In a double-quoted PHP string that’s the same byte as "\n", but spelling it out as 0x0A makes the intent explicit and avoids accidentally reaching for something like PHP_EOL, which is "\r\n" on Windows.

Together they mark where each record starts and ends, so you could even output fancy formatted JSON if you wanted, so long as the RS and LF characters were where they should be.

Then finally, in PHP it can help to call flush() now and then, so output is pushed through to the client instead of being held back in buffers. This flushes every 100 records, because once a record has been output there’s no reason to hang on to it.

```php
if (0 === ++$count % 100) {
    flush();
}
```
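The same idea carries over to other languages. Here’s a hedged JavaScript sketch of that callback for anyone not on PHP. The unit shape ({ id, type, species, lng, lat }) and the geoJsonSeqChunks name are hypothetical, and rather than calling flush() it groups records into batches of batchSize and yields one string per batch, which is a slightly different technique from the PHP version (that one yields every record and flushes every 100).

```javascript
const RS = '\x1e';
const LF = '\n';

// Hypothetical unit shape: { id, type, species, lng, lat }.
// Like the PHP callback, each unit becomes one RS + Feature JSON + LF record,
// but records are grouped so each yielded chunk holds up to `batchSize` of them.
function* geoJsonSeqChunks(units, batchSize = 100) {
  let batch = '';
  let count = 0;

  for (const unit of units) {
    batch += RS + JSON.stringify({
      type: 'Feature',
      geometry: { type: 'Point', coordinates: [unit.lng, unit.lat] },
      // `?? null` mirrors PHP's `$unit->specie?->name` producing null
      properties: { id: unit.id, type: unit.type, species: unit.species ?? null },
    }) + LF;

    if (++count % batchSize === 0) {
      yield batch; // a full batch is ready to be written out
      batch = '';
    }
  }

  if (batch !== '') {
    yield batch; // any leftover records after the last full batch
  }
}
```

In Node, something like Readable.from(geoJsonSeqChunks(units)).pipe(res) (with Readable from node:stream) could write the batches to an http.ServerResponse while respecting backpressure, once the Content-Type: application/geo+json-seq header is set. Database cursors in Node are usually async, in which case the same shape works as an async function* with for await.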

The only other slightly different bit is the Content-Type header.

```php
return response()->stream($callback, status: 200, headers: [
    'Content-Type' => 'application/geo+json-seq',
]);
```

Previously the Content-Type header was application/geo+json, where the +json suffix tells clients it’s JSON and the geo part says it’s GeoJSON. Now it’s application/geo+json-seq: the +json-seq suffix says it’s a JSON Text Sequence, and geo still says the contents are GeoJSON, so together they describe a GeoJSON Text Sequence.

Together that all looks like this.

The response is actually a lot quicker than it looks in the gif, because HTTPie is having a rough time making it all look pretty, but you get the idea.

Any tooling that can handle JSON Text Sequence can handle this GeoJSON Text Sequence response.

Sadly, not all tooling that can handle GeoJSON can handle GeoJSON Text Sequence.
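For those tools, one workaround is to collapse a finished sequence back into a plain FeatureCollection. Here’s a minimal JavaScript sketch, assuming the whole response has already been read into a string; geoJsonSeqToFeatureCollection is a hypothetical name. It splits on RS rather than on newlines, as RFC 7464 intends, so pretty-printed records work too.

```javascript
// Collapse a complete GeoJSON Text Sequence string into a regular FeatureCollection,
// for tools that only understand plain GeoJSON.
function geoJsonSeqToFeatureCollection(text) {
  const features = [];

  // Each record starts with RS (0x1E), so splitting on RS works even when
  // a record is pretty-printed across several lines.
  for (const record of text.split('\x1e')) {
    const json = record.trim(); // drops the trailing LF and any other whitespace
    if (json === '') continue;  // e.g. the empty string before the first RS

    const value = JSON.parse(json); // throws on a malformed record
    if (value && value.type === 'Feature') {
      features.push(value);
    }
  }

  return { type: 'FeatureCollection', features };
}
```

It’s strict on purpose: a malformed record makes JSON.parse throw, which is probably what you want for a one-off conversion. The result can then go anywhere that expects application/geo+json.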

Visualizing Streamed GeoJSON with Mapbox GL

Mapbox GL JS is perfectly happy to be pointed at GeoJSON. You give it a URL and it will go fetch the GeoJSON and pop it onto the map for you.

```javascript
map.addSource('places', {
  type: 'geojson',
  data: 'http://localhost/maps/parcels/1',
});
```

To work with streamed data we sadly need to handle the stream ourselves. This boils down to creating an object to collect all the features, then appending more features to that collection each time more streamed data comes in.

```html
<div id="map"></div>

<script>
  mapboxgl.accessToken = 'ACCESSTOKEN';

  const map = new mapboxgl.Map({
    container: 'map',
    style: 'mapbox://styles/mapbox/standard-satellite',
    center: [-3.33089, 50.99645], // near the test site
    zoom: 18
  });

  map.on('load', () => {
    const featureCollection = {
      type: 'FeatureCollection',
      features: []
    };

    // Initial empty source
    map.addSource('streamSource', {
      type: 'geojson',
      data: featureCollection
    });

    map.addLayer({
      id: 'streamLayer',
      type: 'circle',
      source: 'streamSource',
      paint: {
        'circle-radius': 6,
        'circle-color': '#e63946'
      }
    });

    // Connect to streaming endpoint
    fetch('http://localhost/parcel/1')
      .then(res => {
        const reader = res.body.getReader();
        const decoder = new TextDecoder();
        let buffer = '';

        function read() {
          return reader.read().then(({done, value}) => {
            if (done) return;

            buffer += decoder.decode(value, {stream: true});
            let lines = buffer.split('\n');
            buffer = lines.pop(); // keep partial line

            for (const line of lines) {
              if (!line.trim()) continue;

              // Strip RS (0x1E) off the beginning
              const clean = line.replace(/^\u001E/, '').trim();

              try {
                const feature = JSON.parse(clean);
                if (feature.type === 'Feature') {
                  featureCollection.features.push(feature);
                  map.getSource('streamSource').setData(featureCollection);
                }
              } catch (e) {
                console.error('Bad GeoJSON feature:', e, line);
              }
            }

            return read();
          });
        }

        return read();
      });
  });
</script>
```

A big chunk of code, yes, but as always we can break it down to make sense of it.

```javascript
map.on('load', () => {
  const featureCollection = {
    type: 'FeatureCollection',
    features: []
  };

  // Initial empty source
  map.addSource('streamSource', {
    type: 'geojson',
    data: featureCollection
  });
```

Firstly, seeing as Mapbox wants a FeatureCollection but the stream returns only features, we can emulate a non-streamed GeoJSON response by building our own FeatureCollection and passing it straight in. It starts out empty, so next we go and fetch().

```javascript
fetch('http://localhost/parcel/1')
  .then(res => {
    const reader = res.body.getReader();
    const decoder = new TextDecoder();
```

The built-in Fetch API is handy, and as fetch() returns a Promise we can use .then() (easier than await in this example, but either works).

Normally you would use res.json() to get the JSON body, and guess what? That’s asynchronous too, so you’d need .then() or await again, because it waits for the whole response to arrive. We want direct access to the stream instead, and res.body.getReader() plus a TextDecoder will make that happen.
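Calling res.json() on a GeoJSON Text Sequence would fail anyway, because RS isn’t valid JSON whitespace. If a client might talk to both a plain GeoJSON endpoint and a streamed one, a small check on the Content-Type can decide which way to go. A sketch, with bodyKind as a made-up helper name:

```javascript
// Decide how to read a response body from its Content-Type header.
// Media types are case-insensitive and may be followed by parameters after ';'.
function bodyKind(contentType) {
  if (!contentType) return null;

  const mediaType = contentType.split(';')[0].trim().toLowerCase();

  if (mediaType === 'application/geo+json-seq') return 'stream'; // read with getReader()
  if (mediaType === 'application/geo+json') return 'whole';      // res.json() is fine
  return null;                                                    // something unexpected
}
```

In the fetch() callback that would be bodyKind(res.headers.get('Content-Type')), followed by either res.json() or the getReader() loop.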

```javascript
let buffer = '';

function read() {
  return reader.read().then(({done, value}) => {
    if (done) return;

    buffer += decoder.decode(value, {stream: true});
    let lines = buffer.split('\n');
    buffer = lines.pop(); // keep partial line
```

Start with an empty buffer string, then start reading the stream from the reader. If the reader is done, stop: that’s the end of the response. If not, carry on! Decode the bytes, passing {stream: true} so the decoder knows more are coming, and see how many lines end up in this “chunk”.

Splitting by \n gives us zero or more complete lines from the server, and anything after the last \n sticks around in the buffer for the next call of this self-recursing read() function to deal with.
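The {stream: true} option matters more than it looks. A chunk boundary can land in the middle of a multi-byte UTF-8 character (an accent in a species name, say), and without it the decoder would emit a replacement character (U+FFFD) instead of waiting for the rest of the bytes. Here’s the same buffering logic pulled out into a small, testable JavaScript sketch. createLineSplitter is a hypothetical name, and finish() is an addition that flushes the decoder and any leftover text once the stream ends, which the loop above doesn’t do.

```javascript
// Decode byte chunks into complete lines, carrying partial lines (and partial
// UTF-8 characters) over to the next chunk, like the buffer logic in read().
function createLineSplitter() {
  const decoder = new TextDecoder();
  let buffer = '';

  return {
    push(bytes) {
      buffer += decoder.decode(bytes, { stream: true }); // holds back incomplete characters
      const lines = buffer.split('\n');
      buffer = lines.pop(); // last element is an incomplete line (or '')
      return lines;
    },
    finish() {
      buffer += decoder.decode(); // flush any bytes the decoder was still holding
      const rest = buffer;
      buffer = '';
      return rest === '' ? [] : [rest];
    },
  };
}
```

Calling finish() when done is true means a final record without a trailing LF isn’t silently dropped.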

```javascript
for (const line of lines) {
  if (!line.trim()) continue;

  // Strip RS (0x1E) off the beginning
  const clean = line.replace(/^\u001E/, '').trim();

  try {
    const feature = JSON.parse(clean);
    if (feature.type === 'Feature') {
      featureCollection.features.push(feature);
      map.getSource('streamSource').setData(featureCollection);
    }
  } catch (e) {
    console.error('Bad GeoJSON feature:', e, line);
  }
}

return read();
```

Here’s the real crux of it all. For each line in the chunk, we skip empty lines, strip off the RS character, and trim any whitespace. With all that done we should have a clean JSON object to work with, so let’s parse it into a JS object and see what we’ve got.
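Splitting on \n works here because the server writes each Feature as compact, single-line JSON. RFC 7464 actually delimits records with RS, so a parser that splits on RS instead would also cope with pretty-printed records spanning several lines. A minimal JavaScript sketch of that, with createJsonSeqParser as a hypothetical name. A record is only known to be complete once the next RS (or the end of the stream) arrives, so each record is emitted one RS late.

```javascript
// Incremental JSON Text Sequence parser that splits on RS (0x1E) rather than
// newlines, so pretty-printed records spanning several lines still work.
function createJsonSeqParser(onValue, onError = () => {}) {
  let buffer = '';

  function emit(record) {
    const json = record.trim();
    if (json === '') return; // nothing before the first RS, or an empty record
    try {
      onValue(JSON.parse(json));
    } catch (err) {
      onError(err, record); // skip the bad record and keep going
    }
  }

  return {
    // Feed decoded text; every piece before the last RS is a complete record.
    push(text) {
      buffer += text;
      const records = buffer.split('\x1e');
      buffer = records.pop();
      records.forEach(emit);
    },
    // Call once the stream has ended to handle the final record.
    end() {
      emit(buffer);
      buffer = '';
    },
  };
}
```

You’d feed it decoded text from the reader loop with push() and call end() when done is true. Bad records go to onError and parsing carries on, much like the try/catch in the loop above.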

Every new Feature can get pushed straight into featureCollection.features, and then map.getSource('streamSource').setData(featureCollection); does all the magic of updating the map view.
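One caveat with large parcels: calling setData() for every single feature hands Mapbox the whole, ever-growing collection again each time, and as far as I understand it re-processes all of it, so 20,000 trees means 20,000 increasingly large updates. A hedged alternative is to push every Feature from a chunk and call setData() once per chunk. appendChunk is a hypothetical helper, and source is anything with a setData() method, such as map.getSource('streamSource').

```javascript
// Push every Feature parsed from one chunk, then update the source once.
// `source` is anything with a setData() method, e.g. map.getSource('streamSource').
function appendChunk(featureCollection, values, source) {
  let added = 0;

  for (const value of values) {
    if (value && value.type === 'Feature') {
      featureCollection.features.push(value);
      added++;
    }
  }

  if (added > 0) {
    source.setData(featureCollection); // one update per chunk, not per feature
  }
  return added;
}
```

Inside read(), you’d collect the parsed values from the lines loop into an array and call appendChunk(featureCollection, parsed, map.getSource('streamSource')) once after it. Trees then appear a chunk at a time rather than strictly one at a time, which should still look smooth.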

If you put that all together, you are rewarded with an amazingly smooth visual loading experience as each tree is popped onto the map one at a time.

Testing

No work is complete until some tests have been put in place, and if Laravel PHP is the API backend then let’s test that.

```php
<?php

declare(strict_types=1);

use App\Models\Parcel;
use App\Models\Unit;
use Illuminate\Support\Facades\Bus;
use MatanYadaev\EloquentSpatial\Objects\Polygon;

// A handy helper for converting any data to a json-seq string
function json_seq_encode(mixed $data): string
{
    return chr(0x1E).json_encode($data).chr(0x0A);
}

it('always succeeds and streams each unit available', function (Parcel $parcel) {
    $response = $this->get('/parcel/' . $parcel->id);

    // Will return 200 regardless of how many units
    $response->assertStatus(200);

    // Build the expected json-seq record for each unit, in order
    $streamedItems = $parcel->units()->get()->map(function ($unit) {
        return json_seq_encode([
            'type' => 'Feature',
            'geometry' => $unit->coordinates->toArray(),
            'properties' => [
                'id' => $unit->id,
                'type' => $unit->unit_type->value,
                'species' => $unit->specie?->name,
            ],
        ]);
    });

    // The whole streamed body should match the expected records concatenated
    return $response->assertStreamedContent(implode('', $streamedItems->toArray()));
})->with([
    '0 units' => fn () => Parcel::factory()->create(),
    '5 units' => function () {
        return Parcel::factory()
            ->has(Unit::factory(5)->tree())
            ->create();
    },
]);
```

Not too shabby! With the help of assertStreamedContent() from Laravel’s HTTP testing tools, this makes sure the endpoint works whether a parcel has no units at all or several of them.

Performance & Timing

One last thing we should do now that it’s all working nicely is take a look at the performance of it all. Is this quicker, slower, or some third thing?

There are a couple of measurements relevant to the response time here:

- Waiting - The server has acknowledged the request and is working on a reply, but the response has not started.

- Sending - The response has begun, so the timer has started, and it will end when the response is finished.
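Browser DevTools will show these for you, but as a rough client-side sketch, both can be approximated in JavaScript: fetch() resolves once the response headers arrive, and reading the body to the end marks when the response is finished. timeResponse is a hypothetical helper; it also records the time to the first body chunk, which is where streaming should shine. Numbers from this won’t line up exactly with DevTools.

```javascript
// Roughly measure a fetch from the client side, in milliseconds:
//   waiting:    start until fetch() resolves (response headers have arrived)
//   firstChunk: start until the first body bytes are read
//   sending:    headers until the last body byte is read
async function timeResponse(startFetch) {
  const start = performance.now();
  const res = await startFetch();
  const headersAt = performance.now();

  let firstChunkAt = null;
  let bytes = 0;

  if (res.body) {
    const reader = res.body.getReader();
    for (;;) {
      const { done, value } = await reader.read();
      if (done) break;
      if (firstChunkAt === null) firstChunkAt = performance.now();
      bytes += value.byteLength;
    }
  }

  const end = performance.now();

  return {
    waiting: headersAt - start,
    firstChunk: firstChunkAt === null ? null : firstChunkAt - start,
    sending: end - headersAt,
    bytes,
  };
}
```

For example, timeResponse(() => fetch('http://localhost/parcel/1')).then(console.log) in a browser, or in Node 18+ where fetch is built in.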

Let’s go through the before, the after, and the after-after when I added Cache-Control headers.

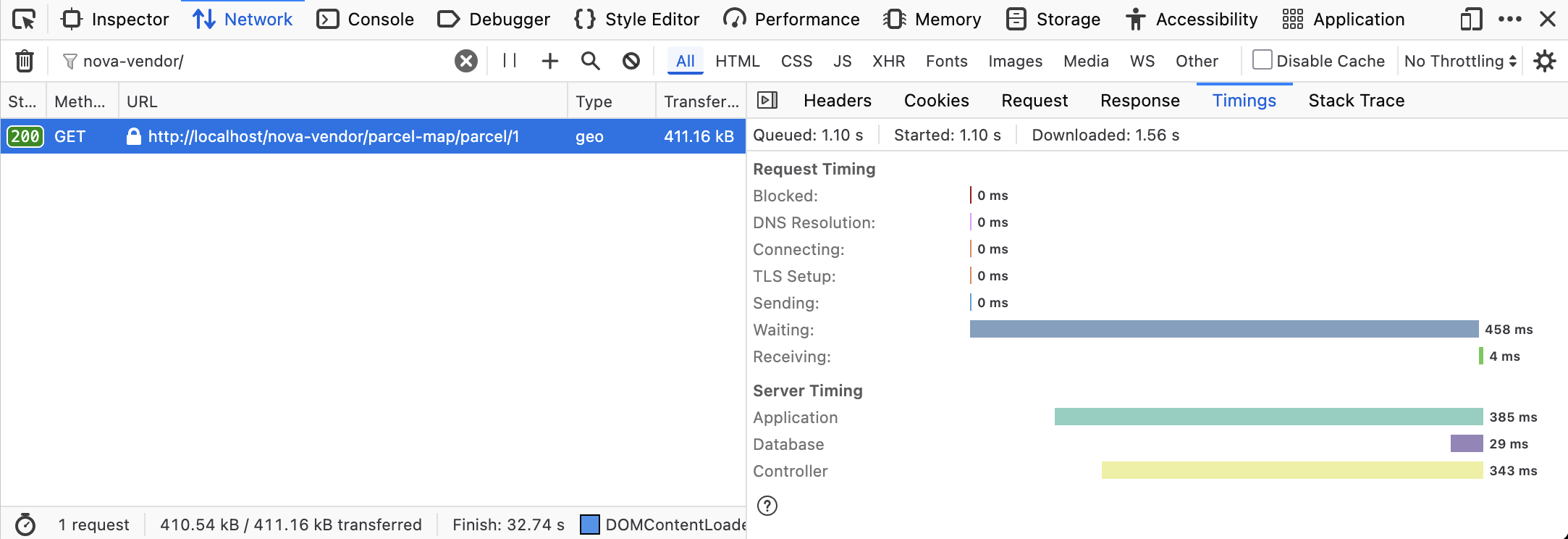

Single GeoJSON Response (Not Streamed)

458ms of waiting! That’s a lot of waiting. This is not even the largest site, only 3,000 trees, so if it was any bigger… ooh heck.

The majority of the time spent here is on the waiting. Nothing for the client to do but wait. As soon as the server is done producing all that JSON it only takes 4ms to return it all, and the client then suddenly needs to start throwing all the pins onto the map at once.

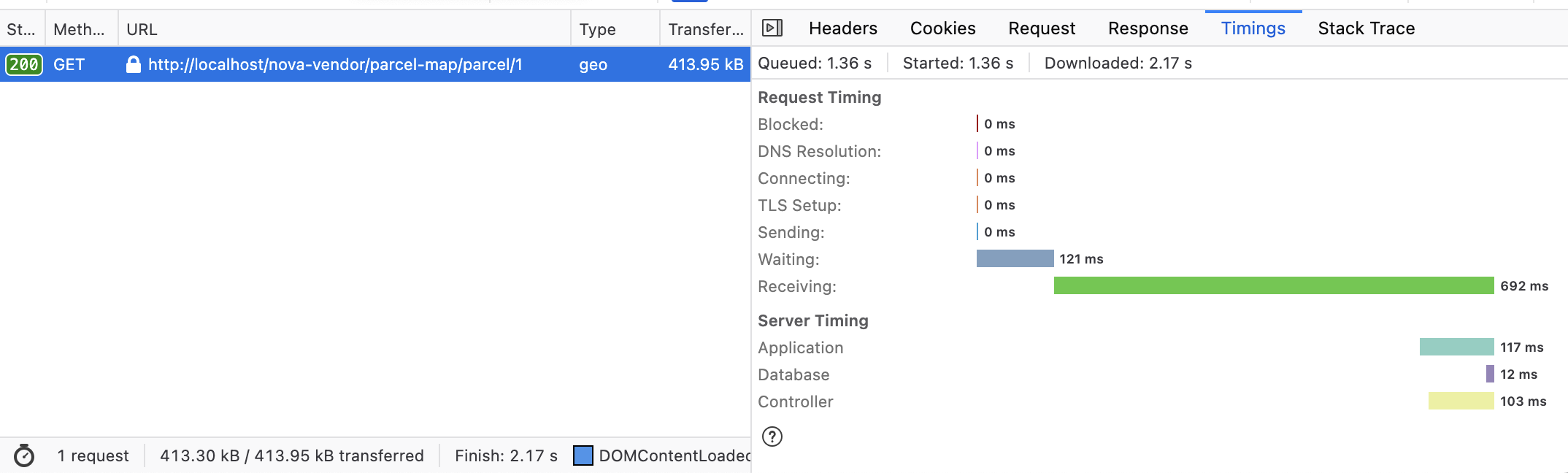

Streamed GeoJSON Sequence

Immediately we see the waiting time has shot right down from 458ms to 121ms, what a win!

The sending time has actually gone up a little, but that’s to be expected, as the server is looping through a bunch of records and JSON-encoding each line as it goes. That could be tweaked by benchmarking sending 5-10 lines at a time to see if it helps, and there are usually faster JSON encoders around than the core language one, but a little bit of extra time is fine with me.

The important thing here is that it started getting results quickly, and helped the client start drawing information earlier, instead of nothing then smack.

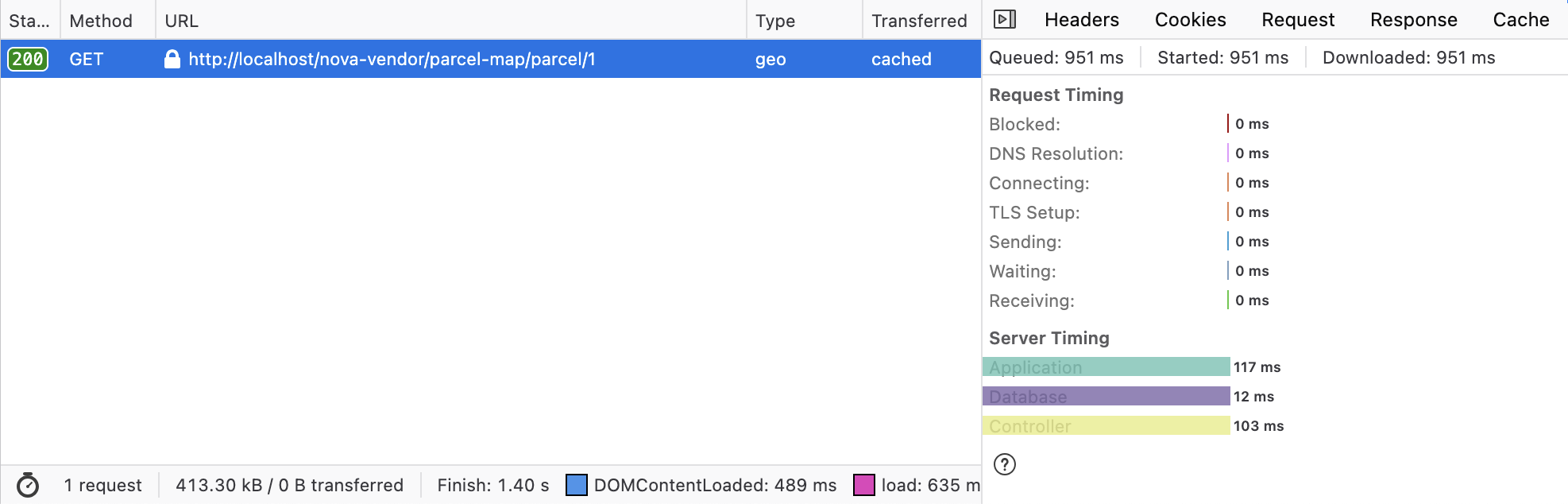

Streamed GeoJSON Sequence with Cache Headers

Streams can be cached! Remember, a stream is just a response that the client can optionally accept one chunk at a time, but if the client already has the response then it can reuse that. The quickest response is always for the request you didn’t make!

There we go. 0ms. Always my favourite response time.

Summary

A lot of ground has been covered in this post, and not just with trees!

We’ve learned that JSON Streaming exists, how it works, and how it can be used for GeoJSON conceptually, and looked through some code examples in PHP (server-side) and JavaScript (client-side), but plenty of other languages exist. It’s worth checking for libraries in your language(s) of choice so you can skip getting too hands-on with the control characters, because I was certainly a little sloppy about handling every possible thing the RFC wants me to.

Streaming is a lot of fun, and GIS is a lot of fun, so combining the two has been a brilliant ride.

Share a comment with us to let us know what you’re using GeoJSON for, and if streaming might help.